From Targets to Co-Designers: Empowering Gen Z in the Age of AI

Gen Z is the first generation to grow up entirely within an AI-powered world. From school apps that track their attention spans to platforms that curate their every scroll, they’ve been nudged, measured, and profiled by algorithms long before most could give meaningful consent. And in many cases, the systems shaping their lives weren’t built with them in mind – they were built about them, for them, but never with them.

If we want to protect and empower young people in the digital world, we have to stop treating them as passive users or data points, and instead start treating them as co-designers of the systems that increasingly define their reality.

From Surveillance to Support

Too many tools aimed at youth rely on surveillance by default. EdTech platforms monitor keystrokes and eye movements. Social media apps mine moods and friendships. Even wellbeing tools can collect data far beyond what’s necessary.

But it doesn’t have to be this way. We can build systems that support young people without stalking them.

This is where the concept of “know nothing by design” comes in. Rather than collect all the data “just in case,” we can design tools that collect the minimum required – or, even better, none at all – while still delivering value.

It’s not a radical idea. We don’t expect our microwaves to report what we’re heating to advertisers. Why should a school app need to know where a teenager is at all times?

The future of safety shouldn’t mean trading away privacy. It means building systems that forget, not just ones that collect.
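For the builders in the room, here is a minimal sketch of what “collect the minimum, then forget” could look like in practice. It is an illustration under stated assumptions, not a reference implementation: the names `ForgetfulStore`, `minimise`, and `ALLOWED_FIELDS`, and the quiz fields themselves, are hypothetical and chosen only for this example.

```python
import time

# Hypothetical: the only fields this imaginary quiz feature actually needs.
ALLOWED_FIELDS = {"quiz_id", "score"}


def minimise(record):
    """Drop every field the feature does not strictly need."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


class ForgetfulStore:
    """Keeps each value for at most `ttl_seconds`, then forgets it."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self._clock = clock   # injectable so expiry can be demonstrated
        self._items = {}      # key -> (expires_at, value)

    def put(self, key, value):
        self._items[key] = (self._clock() + self.ttl_seconds, value)

    def get(self, key):
        self._purge()
        entry = self._items.get(key)
        return entry[1] if entry else None

    def _purge(self):
        # Delete everything whose retention window has passed.
        now = self._clock()
        expired = [k for k, (expires_at, _) in self._items.items()
                   if expires_at <= now]
        for k in expired:
            del self._items[k]


# Usage: location and keystrokes never reach storage at all.
store = ForgetfulStore(ttl_seconds=3600)
store.put("session-1", minimise({
    "quiz_id": "q7", "score": 8,
    "location": "51.5,-0.1", "keystrokes": [12, 40, 7],
}))
```

The design choice worth noticing is that the sensitive fields are discarded before anything is stored, and what is stored has an expiry date by default. Forgetting is the normal behaviour, not a special request.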

Consent Isn’t Just a Click

Ask a young person what they’ve agreed to when they sign up for a new app, and chances are, they won’t be sure. Not because they’re careless – but because most consent mechanisms aren’t built to inform. They’re built to comply.

Click “accept,” and you’re in. But what happens when you change your mind?

That’s the piece we’re missing – the ability to withdraw consent as easily as we give it.

If we’re serious about digital privacy, especially for young people, then consent must be:

- Understandable: No more 40-page terms and conditions.

- Manageable: Users should be able to see and edit what they’ve agreed to.

- Revocable: There must be a clear path to undo consent and remove data.

Where is the “undo” button for your image, your metadata, your teenage thoughts stored in a platform’s database? In most systems, it doesn’t exist.

That’s not consent – it’s capture.

Designing for revocable consent is a small shift with massive impact. It turns users from passive subjects into people with ongoing, active choices.
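To make the three properties concrete, here is a small sketch of a consent record that can be read, checked, and undone. Everything in it is an assumption made for illustration: `ConsentLedger`, its method names, and the `photo_sharing` purpose are hypothetical, and a real system would also need authentication, audit trails, and deletion from backups.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentLedger:
    # user_id -> {purpose: plain-language summary of what was agreed}
    _consents: dict = field(default_factory=dict)
    # (user_id, purpose) -> items stored under that consent
    _data: dict = field(default_factory=dict)

    def grant(self, user_id, purpose, summary):
        """Understandable: each consent carries a short, readable summary."""
        self._consents.setdefault(user_id, {})[purpose] = summary

    def view(self, user_id):
        """Manageable: users can see everything they have agreed to."""
        return dict(self._consents.get(user_id, {}))

    def store(self, user_id, purpose, item):
        """Data is only accepted while a matching consent exists."""
        if purpose not in self._consents.get(user_id, {}):
            raise PermissionError(f"no consent for {purpose!r}")
        self._data.setdefault((user_id, purpose), []).append(item)

    def revoke(self, user_id, purpose):
        """Revocable: withdraw consent and delete the data held under it.

        Returns the number of items deleted.
        """
        self._consents.get(user_id, {}).pop(purpose, None)
        return len(self._data.pop((user_id, purpose), []))


# Usage: giving and withdrawing consent are each a single call.
ledger = ConsentLedger()
ledger.grant("user-1", "photo_sharing", "Show my photos to my classmates")
ledger.store("user-1", "photo_sharing", "photo-001")
deleted = ledger.revoke("user-1", "photo_sharing")
```

In this sketch, revoking is as easy as granting, and revocation removes the data rather than just flipping a flag. That is the “undo” button.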

AI Literacy is Digital Safety

From algorithmic bias to deepfakes and manipulated media, AI now shapes what Gen Z sees, believes, and even how they behave.

And yet, most schools still treat AI literacy as a fringe topic, if they teach it at all.

Digital resilience today means more than strong passwords. It means helping young people understand:

- How recommendation engines work

- How personal data feeds predictive systems

- How to question what they see – especially when it looks “too real”

We don’t just need to teach coding. We need to teach critical thinking about AI. Media literacy is no longer enough. We need to prepare young people not just to use AI, but to question it, challenge it, and understand its impacts.

From Data Subjects to Co-Designers

Perhaps the most important shift we need to make is in who gets to shape the systems.

Right now, Gen Z is the target audience – but rarely a design partner. They’re studied, tested, and optimised for, but their lived experience is rarely brought into the process.

If we want systems that reflect young people’s needs, values, and boundaries, we need to build them with young people, not just for them.

This also means confronting who benefits from their data. It’s time to talk about the decolonisation of personal data.

Big platforms profit from the digital footprints of young users – their attention, behaviour, and preferences become raw materials for advertising, product development, and corporate gain. But where is the return for the user?

Data belongs to the person it came from. That principle must guide how we regulate, design, and monetise digital platforms – especially those aimed at youth.

Where Do We Go from Here?

Empowering Gen Z in the age of AI means more than protecting them. It means respecting them – as thinkers, as citizens, and as collaborators.

To do that, we must:

- Build privacy-respecting tools that don’t rely on constant tracking

- Treat consent as ongoing and revocable, not a one-time checkbox

- Embed AI literacy into education, not as an optional extra but as a core skill

- Include young people in the design, governance, and regulation of AI systems

- Challenge data colonialism by putting control back in the hands of the data owner

Responsible AI isn’t just about making smarter code. It’s about building smarter communities – where everyone, especially young people, has a say in the systems shaping their future.

Want to Go Deeper? Join the Conversation.

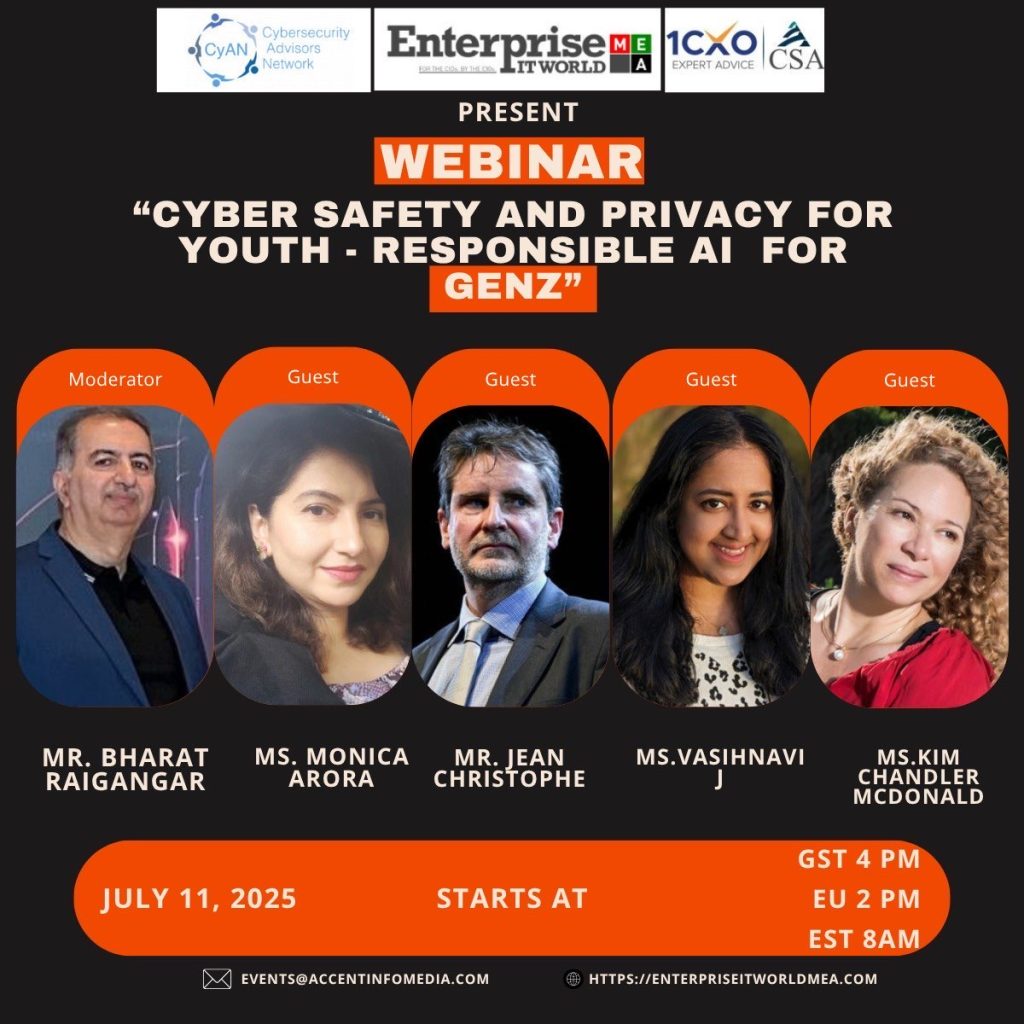

If this topic resonates with you – whether you’re a young person, a parent, an educator, a policymaker, or someone working in tech – I invite you to join our live panel discussion, “Cyber Safety and Privacy for Youth: Responsible AI for Gen Z”: 🗓️ 11 July 2025, 🕑 2pm CET.

Hosted by the Cybersecurity Advisors Network (CyAN) in collaboration with Enterprise IT World and 1CxO CSA, this webinar features panelists Vaishnavi J, Monica Arora, Jean-Christophe (J-C) Le Toquin and myself, with Bharat Raigangar moderating. Together, we’ll unpack the risks, responsibilities, and opportunities of raising a generation in an AI-powered world – and explore how we can all help shape safer, more empowering systems.

Zoom registration link: https://us06web.zoom.us/webinar/register/WN_cWGD-E5NQke1lwXVrofYOw#/registration

About the Author:

Kim Chandler McDonald is the Co-Founder and CEO of 3 Steps Data, driving data/digital governance solutions.

She is also the Global VP of CyAN and an award-winning author, storyteller, and advocate for cybersecurity, digital sovereignty, compliance, governance, and end-user empowerment.