Disinformation and AI – a Growing Challenge

I recently had the pleasure of joining Dr. Egor Zakharov of the AIT Lab at ETH Zurich (the Swiss Federal Institute of Technology in Zurich) for a fireside chat at the ITBN conference in Budapest, Hungary.

Egor is an accomplished researcher and author on AI-generated video content, and an avowed futurist with a highly optimistic outlook on the applications and value of increasingly intelligent and capable video technologies. From avatars for taking part in remote meetings to aged care, he sees almost no limit to the ways artificial intelligence can benefit human interaction.

I aimed to engage him in a discussion of these technologies’ potential applications in a darker, more concerning area: online disinformation. Coordinated social media disinformation campaigns are an established phenomenon, whether run by organised state or state-affiliated actors like the Internet Research Agency (aka the Trolls from Olgino) and China’s 50 Cent Party, or by informal trolling groups à la 4chan. Whatever a group’s motivations, techniques for manipulating and spoiling online discourse, and for undermining or redirecting institutions and the public’s trust in them, were part of politics well before Russian interference in the 2016 US presidential election, the Brexit referendum, or any number of other major social, economic, and political upheavals involving significant disinformation.

Egor and I agreed that trolls will increasingly rely on ever more accessible and sophisticated AI, whether to identify patterns in online discourse and opportunities to sow chaos, to generate content, or to automate the dissemination of information intelligently.

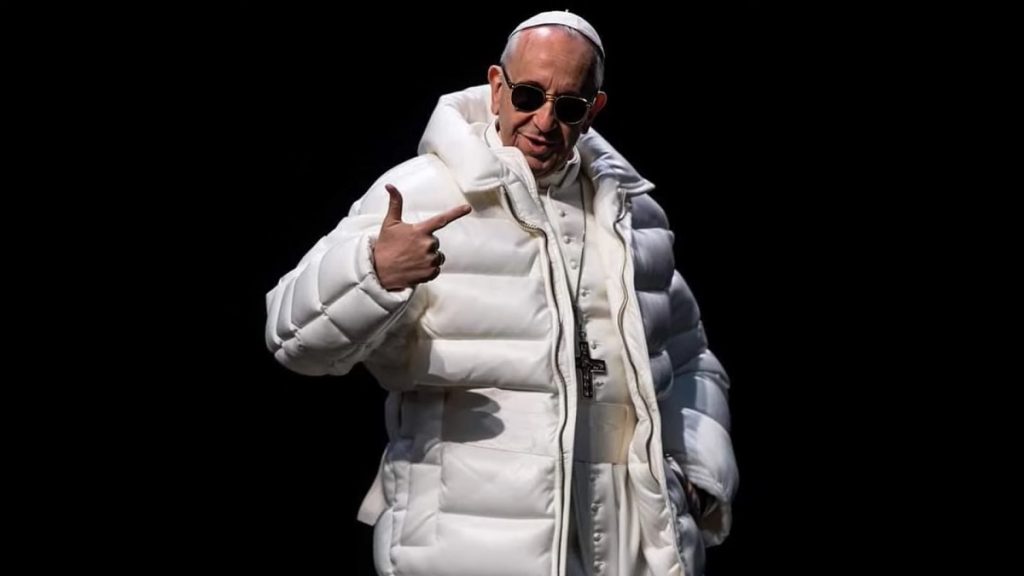

For example, AI-generated avatars and even live deepfake video can make disingenuous propaganda and outright lies much more believable. Do you really know it’s not Pope Francis laying down phat beats, or calling for the mass murder of <insert population group here>?

AI can also make online personas easier to establish and manage, and more believable. Today, fake troll accounts on platforms like Reddit are often easy to spot from their behaviour and even the format of their usernames. AI-generated content will take image and audio fakery to new levels, allowing trolls to create, say, counterfeit mainstream media newsfeeds.

A mitigating factor is that, at least for now, AI-generated content can often still be detected, including with the help of AI tools such as large language models (LLMs). A common trope among those mocking AI-generated content is the frequent inability of tools like Midjourney to get details like hands “right”. Anyone who’s ever found themselves horribly irritated by the auto-generated voiceovers on TikTok videos can attest that much AI content currently falls squarely into the uncanny valley: too realistic to be likeable, not good enough to be relatable, and right on the money for creepy.

This will change. But even as AI-spawned fakes become more credible and realistic, detection mechanisms can also improve, spotting the patterns and attributes typical of AI-generated content.

Unfortunately, this arms race will eventually be won by the creators, although nobody can tell when fakes will become that perfect. What we completely agreed on is that when that point arrives, we had better be ready with other ways of detecting and dealing with fake content. If not, we are sure to be in a world of trouble.

One interesting concern Egor raised was the growing availability of AI tools. As he pointed out, disinformation campaigns currently take a lot of resources to plan, coordinate, and operate. Monitoring online discussions and trends, creating messaging, and “contributing” to discussions subtly enough not to arouse the suspicions of good-faith participants all take knowledge and people. As a result, a campaign powerful enough to have significant political impact is likely to require the kind of capabilities usually associated with state actors: militaries, intelligence agencies, and state-affiliated paramilitary groups such as the Wagner Group or Iran’s Islamic Revolutionary Guard Corps.

With increasingly portable, easy-to-use AI resources, a far wider range of bad actors could potentially cause havoc, from stock market manipulation, local election interference, and even personalised defamation campaigns to planning and running targeted, sophisticated cyberattacks even against smaller victims. This would make such “portable AI” the conceptual equivalent of the terrorist suitcase nuke that caused so much concern among law enforcement and intelligence agencies from the 1990s onward, although, thankfully, that danger never came anywhere near its anticipated potential.

On the positive side, large-scale AI is enormously, and increasingly, resource-intensive, consuming vast amounts of electricity, water, and computing power (echoes of crypto-miners, anyone?). Even with more efficient algorithms and hardware, there are limits, so it’s conceivable that smaller bad actors simply won’t have the wherewithal to deploy such tools effectively. Time will tell.

What’s clear is that regulation alone is not enough, and neither is education about disinformation techniques. The world has seen a steady erosion of trust in institutions. Traditional media outlets, governments, and even basic science and education have been undermined, not least by disinformation actors seeking to exacerbate societal divisions. This makes it difficult to defend against the trolls and fight back, all the more so once they are equipped with ever-smarter tools.

We agreed that what is needed is a holistic approach: a better-informed populace; consistent vigilance against, and correction of, disinformation campaigns; holding social media platforms accountable for weak detection and countermeasures; and, most importantly, the constructive, coordinated use of AI tools for detection, decision-making, content generation, and automation by the “good guys” to help mitigate the impact of AI in evil hands.